I thought I was going to get out in front of all the ChatGPT for GIS discussion for the New York State GIS community, but that turned out to be off by an order of magnitude or so. YouTube is already replete with ChatGPT for GIS tutorials, and if we wait until next week there will probably be another ChatGPT alternative. (I found Matt Forrest's YouTube video a good ChatGPT for GIS intro, and I actually formed one of my questions after one of his examples.) Even Amazon jumped into the fray recently with Amazon Bedrock. Take your pick: Bing Chat, Chatsonic, YouChat, Google Bard (available via a waiting list), and a whole host of others on the market in 2023.

No, the robots aren’t taking over and I suspect we won’t see any of the early Terminator Cyberdyne Systems androids applying for your local GIS Analyst position anytime soon – though it might be a solution for those long dreadful digitizing jobs in the cart lab. One way or another, Chatbot AI technology is definitely here to stay, evolve, and become another piece of the geospatial toolbox.

Nothing specific or implied in any of the questions. Just random chains of thought, though it does take a little getting used to putting the questions together vs. a basic Google search. It also seems to help to refresh and ask the same question two or even three times to get more detailed results. Never was a programmer, so a little short on questions in that space.

A lot here. Actually 17 questions in all. Straight copy and paste. Skim through as you want – find a topic/question you might be interested in. There are tons more which could have been included.

Without further ado, my first machine interview:

eSpatiallyNewYork: What freshwater fish datasets are best for mapping fish population based on water depth in the Great Lakes?

ChatGPT: There are several freshwater fish datasets that can be useful for mapping fish populations based on water depth in the Great Lakes. Here are a few options:

- Great Lakes Acoustic Telemetry Observation System (GLATOS) – This dataset provides information on the movements and behaviors of tagged fish in the Great Lakes. It includes data on depth, temperature, and location, which can be used to create maps of fish populations based on water depth.

- Great Lakes Fishery Commission (GLFC) – The GLFC maintains a database of fish populations in the Great Lakes. This dataset includes information on fish species, size, and location, which can be used to map fish populations based on water depth.

- Great Lakes Environmental Database (GLENDA) – GLENDA is a comprehensive database of environmental data for the Great Lakes region. It includes information on water depth, temperature, and other environmental factors that can be used to map fish populations.

- USGS Great Lakes Science Center – The USGS Great Lakes Science Center conducts research on fish populations in the Great Lakes and maintains a database of fish surveys. This dataset includes information on fish species, abundance, and location, which can be used to map fish populations based on water depth.

Ultimately, the best freshwater fish dataset for mapping fish populations based on water depth in the Great Lakes will depend on the specific research question and the type of analysis being conducted.
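ChatGPT's answer stops at naming sources. Once you have detections or survey records that carry a depth value, the core of "mapping fish populations based on water depth" is grouping those records into depth classes. The following is a minimal Python sketch built on several assumptions. The (species, depth) records and the depth breaks are hypothetical. Real GLATOS, GLFC, or USGS exports use their own field names and formats. An actual map would also need location fields.

```python
from collections import Counter

# Hypothetical detection records as (species, depth in meters). Real exports
# (for example GLATOS detections or USGS survey tables) use their own schemas.
DETECTIONS = [
    ("lake trout", 42.0),
    ("lake trout", 55.5),
    ("lake trout", 61.0),
    ("walleye", 8.2),
    ("walleye", 12.7),
    ("yellow perch", 4.9),
]

# Hypothetical depth-class upper bounds in meters; pick classes that suit the study.
DEPTH_BREAKS = [10, 30, 60]


def depth_class(depth_m: float) -> str:
    """Label a depth with the first class whose upper bound exceeds it."""
    lower = 0
    for upper in DEPTH_BREAKS:
        if depth_m < upper:
            return f"{lower}-{upper} m"
        lower = upper
    return f">={lower} m"  # deeper than the last break


def count_by_depth(records):
    """Count records per (depth class, species) pair."""
    return Counter((depth_class(depth), species) for species, depth in records)


if __name__ == "__main__":
    for (depth, species), n in sorted(count_by_depth(DETECTIONS).items()):
        print(f"{depth:>8}  {species:<13} {n}")
```

Each (depth class, species) count could then be joined to receiver or survey-site locations for symbolizing on a map. That join depends on the dataset and is not shown here.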

eSpatiallyNewYork: To what degree of accuracy can I map features from New York State orthophotography?

ChatGPT: The degree of accuracy with which you can map features from New York State orthophotography depends on various factors such as the resolution of the imagery, the accuracy of the ground control points used for georeferencing, and the skill and expertise of the analyst.

New York State orthophotography typically has a resolution ranging from 0.5 to 1 meter, which means that individual features such as buildings, roads, and other infrastructure can be identified and mapped with a reasonable level of accuracy. However, mapping smaller features such as individual trees, shrubs, or small structures may be more challenging, as these features may not be visible at this resolution.

The accuracy of mapping also depends on the quality of the georeferencing. The ground control points used for georeferencing should be at least as accurate as the resolution of the orthophotography itself. This ensures that the imagery is correctly aligned with the ground, allowing for accurate measurements and mapping.

The skill and expertise of the analyst are also critical factors in the accuracy of mapping features from orthophotography. A trained analyst with experience in image interpretation and feature extraction techniques can identify and map features with a high degree of accuracy.

Overall, mapping features from New York State orthophotography can provide a reasonable degree of accuracy for larger features such as buildings, roads, and other infrastructure. However, mapping smaller features may require higher resolution imagery or other sources of data. It is always important to validate and verify the accuracy of the mapping results through ground truthing and other quality control measures.
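One common way to do the validation mentioned above is the FGDC National Standard for Spatial Data Accuracy (NSSDA). This method compares mapped positions against independently surveyed check points and reports horizontal accuracy at the 95% confidence level. The following is a minimal Python sketch. The check-point coordinates (in meters) are hypothetical. NSSDA calls for at least 20 well-distributed check points, so the four here are only for illustration.

```python
import math

# Hypothetical check points: (mapped_x, mapped_y, surveyed_x, surveyed_y) in meters,
# e.g. features digitized from orthophotography vs. GPS ground-truth positions.
CHECK_POINTS = [
    (1000.3, 2000.3, 1000.0, 2000.0),
    (1499.7, 2500.3, 1500.0, 2500.0),
    (1800.3, 2199.7, 1800.0, 2200.0),
    (2099.7, 2399.7, 2100.0, 2400.0),
]


def rmse(errors):
    """Root mean square of a list of coordinate errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def nssda_horizontal(points):
    """Return (RMSE_x, RMSE_y, RMSE_r, horizontal accuracy at 95% confidence)."""
    dx = [mx - sx for mx, my, sx, sy in points]
    dy = [my - sy for mx, my, sx, sy in points]
    rmse_x, rmse_y = rmse(dx), rmse(dy)
    rmse_r = math.hypot(rmse_x, rmse_y)
    largest = max(rmse_x, rmse_y)
    if largest == 0:
        return rmse_x, rmse_y, rmse_r, 0.0  # perfect agreement
    # NSSDA's approximation is only valid when the smaller RMSE is at least
    # 0.6 of the larger one; otherwise a different method is required.
    if min(rmse_x, rmse_y) / largest < 0.6:
        raise ValueError("RMSE_x and RMSE_y differ too much for this approximation")
    accuracy_95 = 2.4477 * 0.5 * (rmse_x + rmse_y)
    return rmse_x, rmse_y, rmse_r, accuracy_95


if __name__ == "__main__":
    rx, ry, rr, acc = nssda_horizontal(CHECK_POINTS)
    print(f"RMSE_x={rx:.3f} m  RMSE_y={ry:.3f} m  RMSE_r={rr:.3f} m  "
          f"accuracy(95%)={acc:.3f} m")
```

When RMSE_x and RMSE_y are equal, the 2.4477 × 0.5 × (RMSE_x + RMSE_y) formula reduces to the familiar 1.7308 × RMSE_r.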

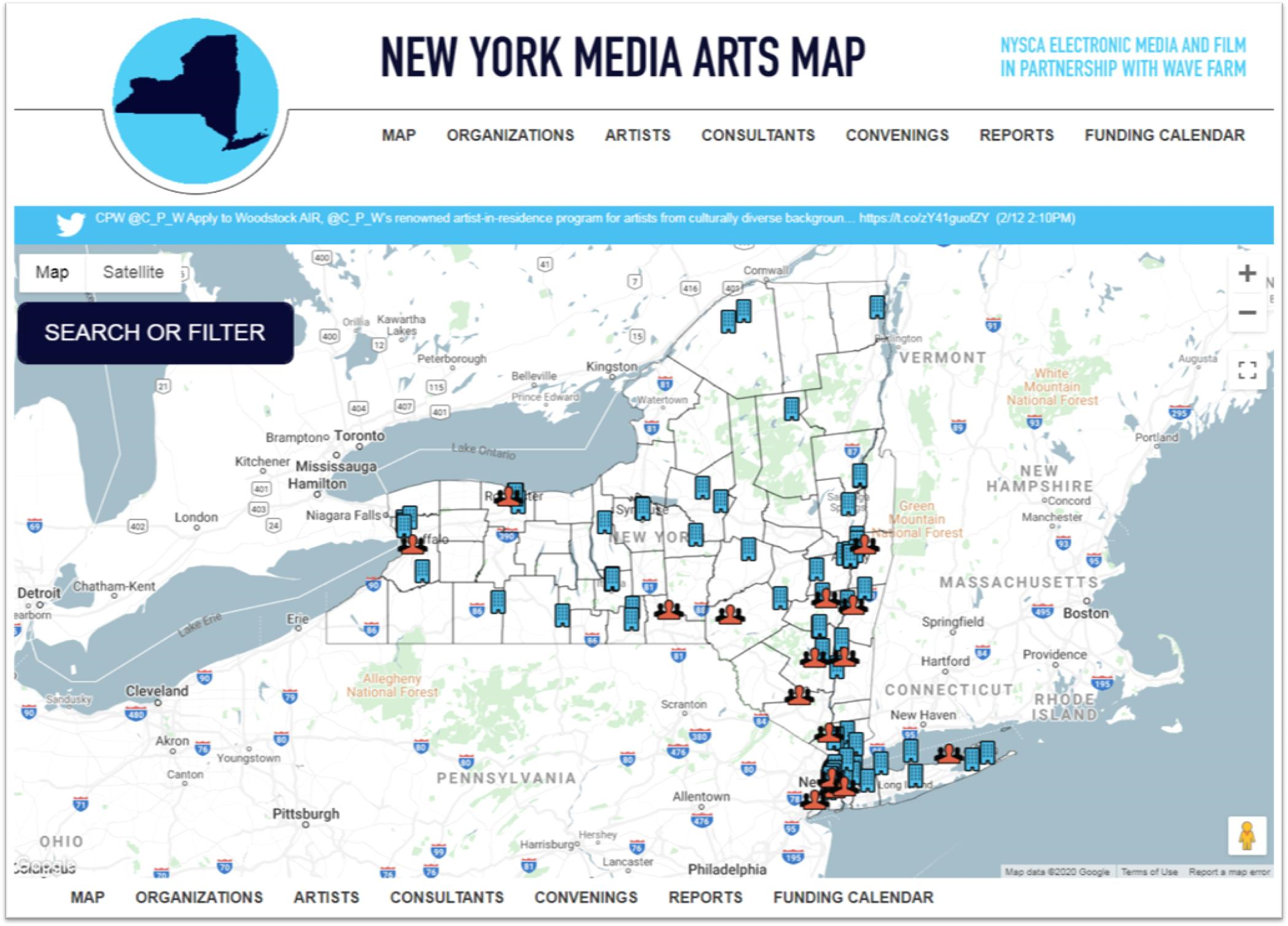

A lot of great geospatial projects and content are coming out of the

A lot of great geospatial projects and content are coming out of the

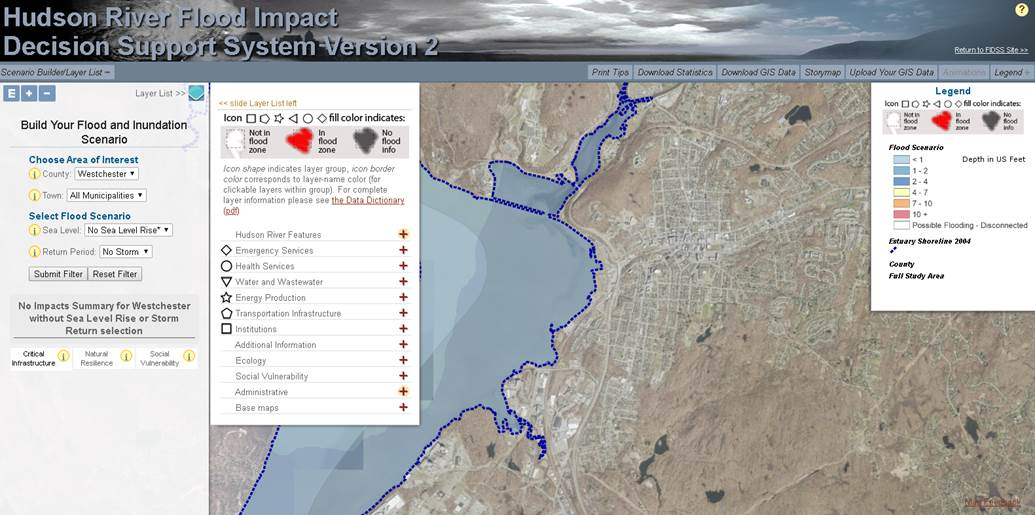

The report was published by the Harvestworks Digital Media Arts Center and

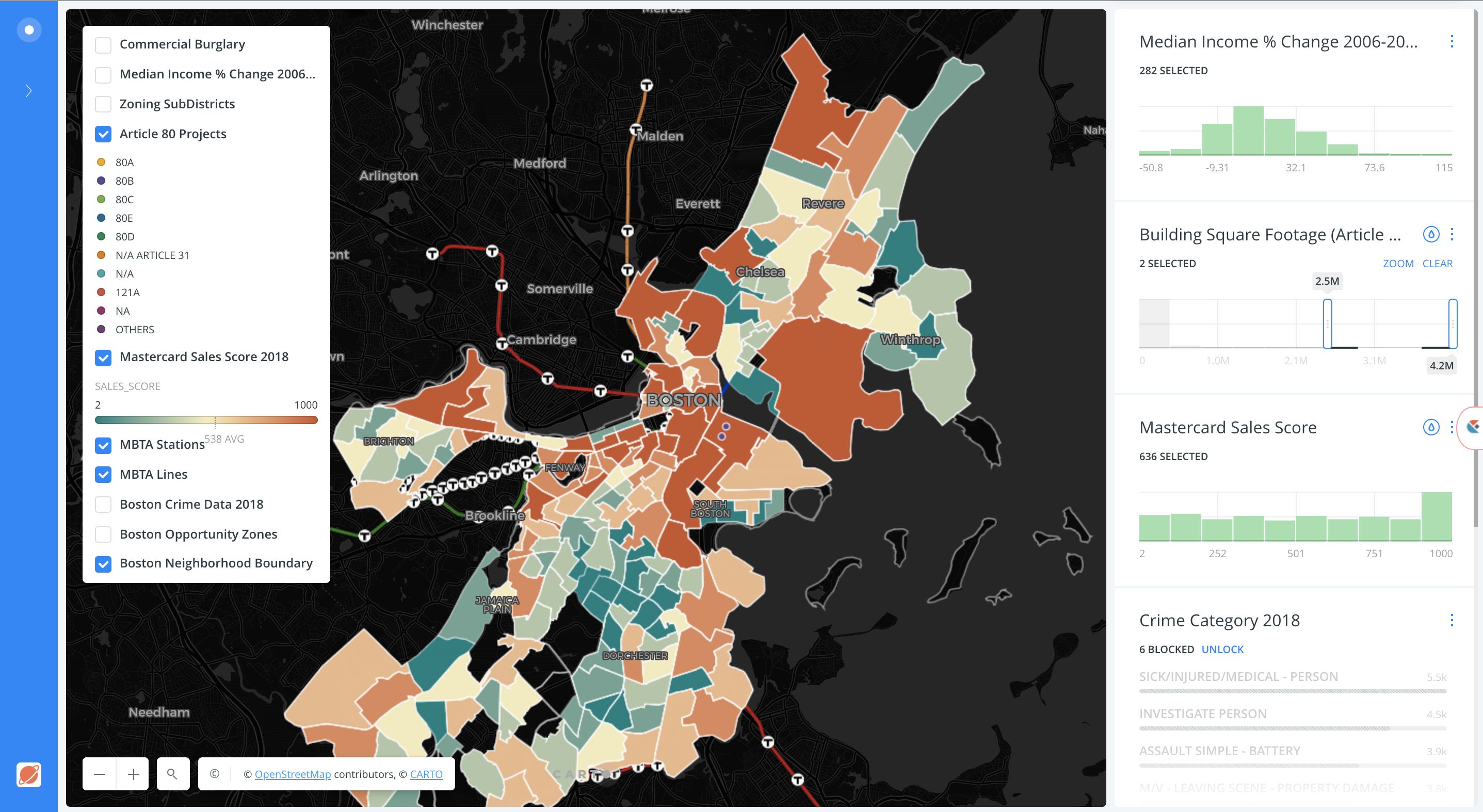

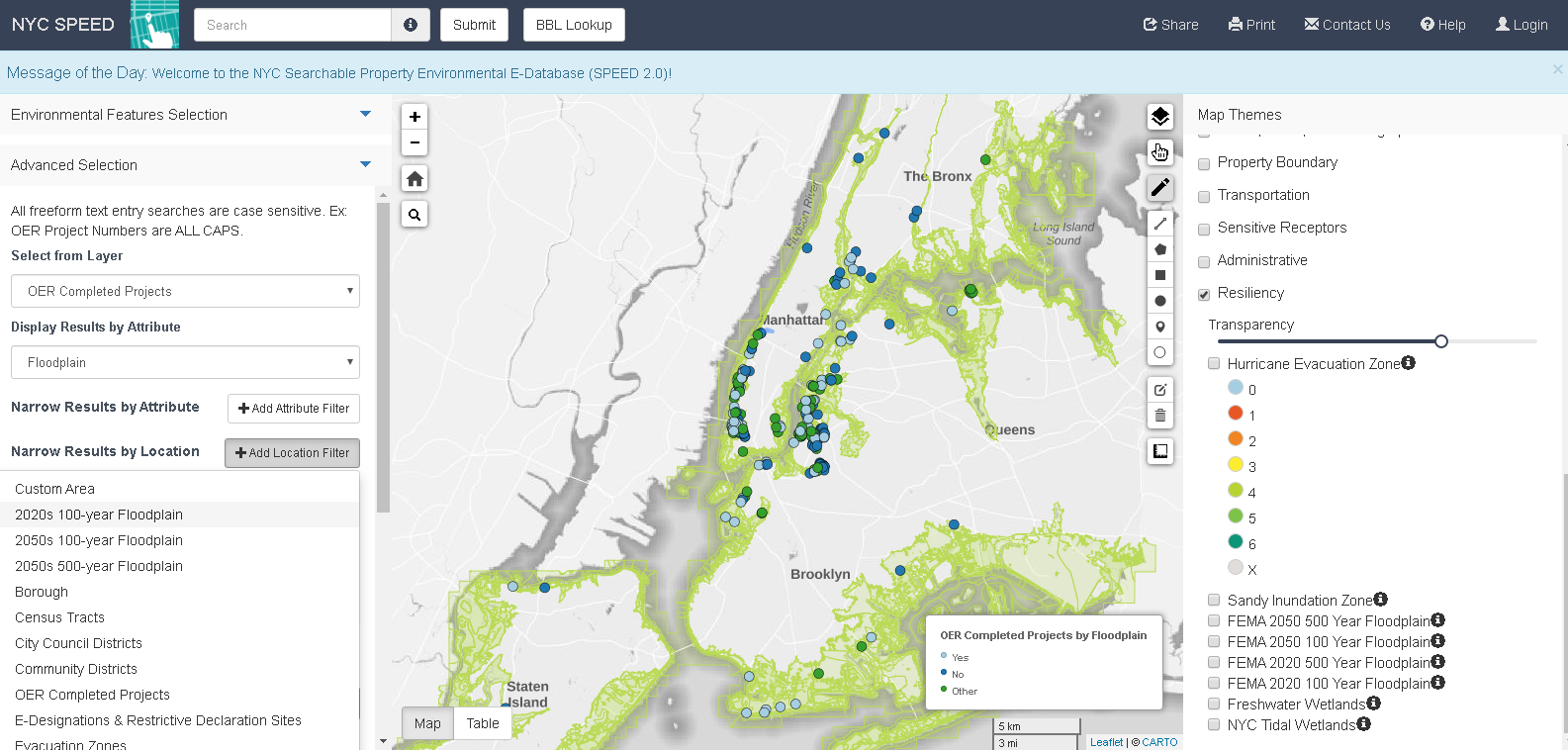

The report was published by the Harvestworks Digital Media Arts Center and  Founded by Javier de la Torre, CARTO is a diverse and expanding company which includes data scientists, geospatial analysts, cartographers, software developers and engineers, visualization experts, and web designers focusing on Location Intelligence. Most recently in May 2019, CARTO expanded its worldwide professional service portfolio offerings by

Founded by Javier de la Torre, CARTO is a diverse and expanding company which includes data scientists, geospatial analysts, cartographers, software developers and engineers, visualization experts, and web designers focusing on Location Intelligence. Most recently in May 2019, CARTO expanded its worldwide professional service portfolio offerings by